The term “artificial intelligence” covers self-learning systems used in a wide variety of fast-developing technologies. AI can be used, for example, to increase the efficiency of production plants and to improve the prevention of diseases. As digitalisation progresses, AI will play an important role in the economy and in society. Alongside its benefits, however, the use of AI systems also creates challenges and risks that regulation is meant to keep under control. A company’s future strategy should therefore take into account how the legal framework for AI develops.

Artificial intelligence in Europe

Ulrich Herfurth, Lawyer in Hanover and Brussels

Sara Nesler, Mag. iur. (Torino), LL.M. (Münster)

Development within the EU

In 2020 the European Union published a White Paper that expands the AI strategy presented in 2018 in two main directions. First, the EU intends to promote AI development and research by making resources available and to build up AI competences within the EU through greater cooperation between the member states. It also wants to strengthen citizens’ confidence in the new technologies and ensure that small and medium-sized enterprises have access to AI. Second, it recognises the need for comprehensive regulation at the European level.

As a result, on 21 April 2021 the European Commission presented a proposal for a regulation laying down harmonised rules on artificial intelligence (AI Regulation). The main aim of the draft is to regulate the use of AI systems in the EU so that the risks of the technology are minimised without complicating or restricting its development.

Scope of the Regulation

The AI Regulation adopts a broad definition of AI. As a result, it applies not only to modern machine-learning systems but also to traditional, hard-coded software based on logic and statistics. The entire value chain of AI systems is affected, in both the private and the public sector. The planned regulation is therefore addressed to providers, developers and manufacturers of AI systems, but also to importers, distributors and users (excluding consumers).

Measures by risk level

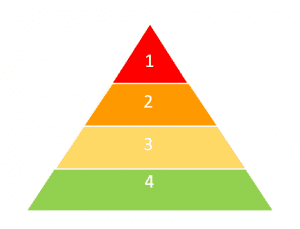

To cover the full range of AI systems and applications without placing unnecessary limits on the development and use of the new technologies, the regulation follows a risk-based approach.

The draft defines four risk levels, which may be modified or extended in the future as needed.

(1) Unacceptable risk

AI systems that pose an unacceptable risk will be banned. These include systems that manipulate people’s behaviour or exploit their physical or psychological weaknesses in a way that can cause physical or psychological harm. Examples are toys that induce young children to behave dangerously, so-called “social scoring” by public authorities, which can lead to discrimination, and real-time remote biometric identification in publicly accessible spaces for law enforcement purposes (with narrow exceptions, such as locating or identifying a perpetrator or suspect of a serious criminal offence).

(2) High risk

This risk level covers two main groups of applications: first, AI systems used as safety components of products (e.g. in robot-assisted surgery or in vehicle safety systems), and second, systems used in sensitive areas where they may lead to violations of fundamental rights. The second group includes critical infrastructure, access to schooling and education, recruitment procedures, law enforcement, the administration of justice, and essential private and public services such as credit scoring.

For high-risk AI systems, the regulation sets strict requirements, including risk assessment, the quality of the data sets used, logging to ensure traceability of operations, and adequate human oversight. To ensure compliance, a high-risk AI system may only be placed on the market after a successful (internal or external) conformity assessment, registration in a dedicated European database and affixing of the CE conformity marking.

In addition to the obligations for providers and product manufacturers, the planned AI Regulation also imposes verification, reporting and due-diligence obligations on importers and distributors.

(3) Limited risk

AI systems that are subject to specific transparency obligations are classified as limited risk. For example, people will have to be informed when they are interacting with a chatbot, and so-called deep fakes (generated or manipulated content that appears to show real persons, objects or events as authentic) will have to be labelled as such. The same applies to systems that recognise emotions or perform biometric categorisation.

(4) Minimal risk

Most AI systems, such as AI-enabled video games or spam filters, pose only minimal risk under the AI Regulation and may therefore be used freely, subject only to the law already in force.
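For readers who think in terms of systems, the tiered structure can be pictured as a simple lookup from use case to obligations. The following Python snippet is only an illustrative sketch under our own assumptions: the names `RiskLevel`, `EXAMPLE_USE_CASES` and `obligations_for` are hypothetical, the use cases are limited to the examples named in the four tiers above, and the third tier is labelled `LIMITED`, the term the Commission itself uses. It is not a legal classification tool; in reality, classification depends on the wording of the regulation and its annexes.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four risk tiers of the 2021 draft, with obligations summarised."""
    UNACCEPTABLE = "prohibited"
    HIGH = "conformity assessment, EU database registration, CE marking"
    LIMITED = "transparency obligations (e.g. disclose chatbot or deep fake)"
    MINIMAL = "no additional obligations beyond existing law"


# Hypothetical lookup built only from the examples named in the text.
# Real classification depends on the legal wording and annexes, not on labels.
EXAMPLE_USE_CASES = {
    "social scoring by public authorities": RiskLevel.UNACCEPTABLE,
    "toy encouraging dangerous behaviour": RiskLevel.UNACCEPTABLE,
    "robotic surgery component": RiskLevel.HIGH,
    "recruitment screening": RiskLevel.HIGH,
    "credit scoring": RiskLevel.HIGH,
    "chatbot": RiskLevel.LIMITED,
    "deep fake generation": RiskLevel.LIMITED,
    "emotion recognition": RiskLevel.LIMITED,
    "spam filter": RiskLevel.MINIMAL,
    "video game": RiskLevel.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return the tier and summarised obligations for a known example use case."""
    level = EXAMPLE_USE_CASES.get(use_case)
    if level is None:
        # Unknown cases are not silently treated as minimal risk.
        raise KeyError(f"no example classification for {use_case!r}")
    return f"{level.name.lower()}: {level.value}"


if __name__ == "__main__":
    for case in ("credit scoring", "chatbot", "spam filter"):
        print(case, "->", obligations_for(case))
```

Modelling the tiers as an enumeration keeps the four levels closed and explicit, and an unknown use case raises an error instead of defaulting to minimal risk, which reflects the fact that each system has to be assessed individually.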

Recent issues for discussion

However, it is questionable whether the Commission will succeed with the draft regulation in creating a clear legal framework that fully protects fundamental rights without making the development and use of AI systems excessively difficult.

Legal uncertainty

Terms such as “psychological harm” and “psychological weakness” are not defined in detail. This matters both for businesses and for the effective protection of citizens: it is unclear, for example, how far nudging may go before it is regarded as an unacceptable risk.

Extensive requirements

Providers and product manufacturers criticise the requirements for high-risk systems and their verification as too complicated. At the same time, experts complain that the draft takes too much account of economic interests. Because high-risk systems can have serious negative consequences, they argue, an internal conformity assessment by the provider should not be sufficient, as the draft envisages for most high-risk systems. Providers, on the other hand, welcome this approach because it better protects their intellectual property and trade secrets.

Practical feasibility

It is also unclear how some provisions can be implemented in practice. Human oversight of automated assessments and decisions, for example, is regulated much more broadly than in the GDPR (Art. 22). Under the AI Regulation, merely involving a human in the decision as a formality is not sufficient. Instead, people who understand the system and are aware of the risk of over-relying on its output (“automation bias”) should be able to decide independently whether to use the AI system at all or to disregard its result.

Currently, this is often not the case when a customer’s creditworthiness is assessed. It is true that a human assessment is carried out alongside the algorithmic one, and the final decision on whether to grant a loan is usually made by a person. Nevertheless, the algorithmic assessment plays a central role in calculating the interest rate. Here the clerk is bound by the “system” and cannot fully understand which criteria have gone into the assessment; for data protection reasons, not all of the criteria that feed into the score are disclosed to the bank.

Automated assessments and decisions play a growing role in many areas of the economy and society. Greater transparency of these processes is therefore desirable if AI is to serve people and earn their trust, as the Commission intends. At the same time, it must be considered how, and to what extent, the rules can be applied in practice without extensive human control and correction cancelling out the supposed advantages of AI, such as time savings and objectivity. If no balance is found, the role of the human decision-maker will remain largely on paper, as it has under the General Data Protection Regulation. Finally, a decision to override the algorithmic assessment could raise liability issues, and in practice many human decision-makers would be reluctant to take on that responsibility.

Liability and enforcement

The proposed regulation leaves open who should be liable for damage caused by AI-controlled machines and systems where no contractual liability applies, for example damage caused by a malfunction of an autonomously driving vehicle. In this respect, the draft lacks the necessary systematic approach. (More on this in the Compact “Artificial Intelligence and Law”, Ulrich Herfurth, January 2019.)

The regulation does not yet provide any specific rights for people who are assessed by AI systems or whose behaviour is steered by them. Enforcing the law in the field of artificial intelligence is particularly difficult because the processes are often not transparent. Even so, the draft does not provide for a reversal of the burden of proof or for easing the proof of causation.

Implementation of the Regulation

To implement the regulation, a European Artificial Intelligence Board with mainly advisory functions is planned. Modelled on the European Data Protection Board, the new body is to consist of the competent national supervisory authorities of the member states and the European Data Protection Supervisor. Compliance with the requirements for high-risk systems is to be monitored by the market surveillance authorities.

Violations of the AI Regulation are subject to heavy fines. For the most serious infringements, these can reach up to 30 million euros or, for companies, 6 % of total worldwide annual turnover, whichever is higher. It is therefore essential for companies to monitor legal developments and plan their strategy accordingly.
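To illustrate how the two caps interact, the following Python sketch computes the maximum possible fine. It assumes the rule in the Commission's 2021 draft for the most serious infringements (prohibited practices and breaches of the data governance requirements): up to 30 million euros or, for a company, up to 6 % of total worldwide annual turnover for the preceding financial year, whichever is higher. The function name `max_fine_eur` is hypothetical, and the draft sets lower caps for other infringements.

```python
def max_fine_eur(worldwide_annual_turnover_eur: float) -> float:
    """Return the upper limit of a fine for the most serious infringements.

    Assumption (2021 draft): up to EUR 30 million or, for a company,
    up to 6 % of total worldwide annual turnover of the preceding
    financial year, whichever is higher.
    """
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    fixed_cap_eur = 30_000_000
    # Multiply before dividing so round figures stay exact in floating point.
    turnover_cap_eur = worldwide_annual_turnover_eur * 6 / 100
    # "Whichever is higher": the larger of the two caps is the limit.
    return float(max(fixed_cap_eur, turnover_cap_eur))


print(max_fine_eur(200_000_000))    # fixed cap applies
print(max_fine_eur(1_000_000_000))  # turnover-based cap applies
```

For a company with a worldwide turnover of 200 million euros, 6 % would be 12 million euros, so the fixed cap of 30 million euros applies; at a turnover of 1 billion euros, the turnover-based cap of 60 million euros is higher. These figures are only upper limits; the actual fine depends on the circumstances of each case.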

Outlook

The draft is still at the beginning of the legislative process. It remains unclear whether any changes will favour the development of AI and the associated economic interests or the protection of citizens’ fundamental rights. Both interest groups are lobbying intensively.

The AI regulation is expected to come into force in 2024.

+++

See also Compact “Artificial Intelligence and Law”, Ulrich Herfurth, January 2019.