Hanover, 01.10.2024 | It is anticipated that the AI market in Europe will reach a value of approximately 42 billion euros by the end of 2024, representing a near doubling of the market value from 2020. The market is then forecast to grow further, reaching a value of over 190 billion euros by 2030 (Statista Analysis). In 2021, just 8% of European companies were utilising AI technology. However, experts believe this figure had doubled by early 2023. In certain sectors, AI adoption could be even more significant, with up to 75% of companies utilising AI solutions. The accelerated pace of development is compelling many companies to maintain continuous engagement with emerging technologies and their associated regulations.

European legislators are facing challenges in keeping pace with the rapid developments in AI. In 2021, we examined the initial draft of the Artificial Intelligence Act (AI Act) in our Compact on ‘Artificial Intelligence in Europe’. The aim of the Regulation was to provide a framework for the responsible use of AI systems in the EU, with a view to minimising the associated risks. Following the introduction of ChatGPT in November 2022, it became evident that the draft from April 2021 was already out of date. The final version of the AI Act was published on 12 July 2024 and came into force on 1 August 2024.

Artificial Intelligence in Europe: The AI Act

Ulrich Herfurth, attorney at law in Hanover and Brussels, Sara Nesler, Mag. iur. (Torino), LL.M. (Münster)

Scope of application of the AI Act

Definition of AI system

The term ‘AI system’, which is the central element of the Regulation, was the subject of intense debate during the legislative process, as many parties feared that it would be too vague. Ultimately, an AI system was defined as ‘a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments’.

It is to be welcomed that this definition corresponds almost literally to the OECD definition, which was updated in 2023. Ultimately, what distinguishes an AI system from conventional software is a degree of autonomy, i.e. the ability to operate without human intervention. This criterion was already included in the April 2021 draft. If there is any doubt as to whether a system is AI, it should be treated as AI as a precaution.

Personal scope of application

The entire value chain of AI systems is affected, both in the private and public sectors. The obligations of the AI Act are primarily aimed at the providers of AI systems, partly at the operator (referred to as the ‘deployer’ in the English text of the Regulation) and only selectively at the importer, the distributor, the authorised representative, the notified body (an independent organisation responsible for the assessment and certification of AI systems) or the end user.

Geographical scope of application

To ensure comprehensive protection, the scope of the Regulation also extends to providers and operators of AI systems established in a third country or located in a third country if the output generated by the AI system is used in the Union (Art. 2 para. 1 lit. c AI Act).

This is intended to prevent the import of illegal AI systems, even if the specific location of an AI system cannot be reliably determined by outsiders or can be easily relocated. In practice, however, this rule is very difficult to enforce without comprehensive monitoring (which is problematic in terms of fundamental rights), which means that third-country providers are likely to circumvent the EU rules.

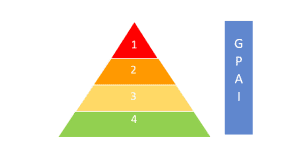

Risk-based measures

In order to comprehensively regulate different AI systems and applications without creating unnecessary barriers to the development and use of new technologies, the Regulation follows a risk-based approach with four risk levels. Independently of these four levels, the final text introduces the category of ‘General Purpose Artificial Intelligence Model’ (GPAI model), which was not part of the April 2021 draft.

(1) Prohibited AI systems (Art. 5 AI Act)

AI systems that pose an unacceptable risk are prohibited. This includes systems that manipulate people’s behaviour or exploit physical or mental weaknesses to induce people to behave in a harmful way, e.g. toys that encourage young children to behave dangerously. The extent to which AI-driven social media feeds and personalised advertising will be covered is still unclear. Social scoring and real-time remote biometric identification in publicly accessible spaces are also banned (the latter with exceptions, including for the identification and tracking of criminals or suspects).

(2) High-risk AI systems (Art. 6-49 AI Act)

This risk level covers two main groups of applications: safety systems and components, e.g. applications in robot-assisted surgery or safety components in motor vehicles or toys; and systems that are used in sensitive areas and may lead to violations of fundamental rights. These include critical infrastructure, access to education and training, recruitment procedures, law enforcement, the administration of justice and essential private and public services such as credit scoring. AI systems that do not pose a significant risk to specific legal interests and do not have a significant impact on decision-making processes will not be classified as high-risk systems. This applies in particular to AI applications that only perform preliminary tasks that are subsequently supplemented by a human decision. In practice, this exception and its (difficult) demarcation will be of great importance.

The high requirements for high-risk AI systems, which were already set out in the first draft, have hardly changed in the course of the legislative process. The strict requirements concern, among other things, the risk assessment of systems, the quality of data sets, the traceability of processes and appropriate human supervision. To ensure compliance, a positive conformity assessment (internal or external), registration in a European database set up for this purpose and the affixing of a CE mark are required before a high-risk AI system can be placed on the market. The fundamental rights impact assessment provided for in Art. 27 AI Act is new.

(3) AI systems with limited risk (Art. 50 AI Act)

AI systems with limited risk are only subject to light transparency requirements. These vary depending on the application. For example, the use of chatbots must be disclosed in future, and deep fakes (manipulated or generated content that falsely appears to show authentic people, facts or objects) must be labelled as such. The same duty of disclosure applies to systems that recognise emotions or perform biometric categorisations.

(4) Minimal risk

The majority of AI systems, such as AI-based recommendation systems or spam filters, do not fall into any of the above risk categories and are not subject to regulation. The AI Act only provides for the creation of codes of conduct for such systems, compliance with which is voluntary.
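The four levels described above can be read as a triage that runs from the strictest category to the most lenient. The following Python sketch is only an illustration of that reading: the function `triage`, its four boolean inputs and the obligation labels are hypothetical simplifications, the answers to the four questions would have to come from a legal assessment, and in the Regulation the levels are not strictly exclusive (a high-risk system may also be subject to transparency duties).

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited (Art. 5)"
    HIGH = "high risk (Art. 6-49)"
    LIMITED = "limited risk (Art. 50)"
    MINIMAL = "minimal risk"


# Headline consequences per level, paraphrased from the article text.
# The labels are illustrative shorthand, not terms taken from the AI Act.
OBLIGATIONS = {
    RiskTier.PROHIBITED: ["may not be placed on the market or used"],
    RiskTier.HIGH: [
        "risk assessment",
        "data set quality",
        "traceability",
        "human supervision",
        "conformity assessment",
        "registration in EU database",
        "CE mark",
        "fundamental rights impact assessment",
    ],
    RiskTier.LIMITED: ["transparency disclosure"],
    RiskTier.MINIMAL: ["voluntary codes of conduct"],
}


def triage(prohibited_practice: bool, sensitive_or_safety_use: bool,
           only_preparatory_task: bool, needs_disclosure: bool) -> RiskTier:
    """Return the strictest applicable level (hypothetical helper)."""
    if prohibited_practice:
        return RiskTier.PROHIBITED
    # Assumption: a purely preparatory task that is followed by a human
    # decision falls under the exception from the high-risk category.
    if sensitive_or_safety_use and not only_preparatory_task:
        return RiskTier.HIGH
    if needs_disclosure:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# Example: a recruitment tool that ranks applicants on its own.
tier = triage(False, True, False, False)
print(tier.value, "->", ", ".join(OBLIGATIONS[tier]))
```

The order of the checks matters: a chatbot used for credit scoring would be assessed as high risk before its disclosure duty is even considered, which is why the sketch returns only the strictest level.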

AI models with general purpose applications (GPAI models)

The regulation of GPAI models in the AI Act was a response to the introduction of ChatGPT. It was recognised that such general-purpose tools would fall through the cracks of the AI Act’s risk approach.

Definition

GPAI models are defined as ‘AI models that display significant generality, are capable of competently performing a wide range of distinct tasks and that can be integrated into a variety of downstream systems or applications’.

The AI Act does not include a definition of ‘AI model’. If ChatGPT is asked about the difference between an AI system, an AI model and an AI application, and what ChatGPT is, it will tell you: ChatGPT is an AI application that uses a specific model (Generative Pre-trained Transformer) and is operated as part of a comprehensive system to provide its functionality.

Again, in line with the general risk-based approach of the Regulation, a distinction has to be made between ‘GPAI models’ and ‘GPAI models with systemic risk’: A systemic risk within the meaning of Art. 51 para. 1 AI Act exists if a GPAI model has high-impact capabilities or is classified as equivalent by the Commission. High-impact capabilities are presumed if the cumulative computation used for training exceeds 10^25 floating-point operations (FLOPs).
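To give a feeling for the order of magnitude of the 10^25 threshold, the following Python sketch estimates training compute with a widely used rule of thumb of roughly six floating-point operations per model parameter and training token. This heuristic is an assumption of the sketch and is not prescribed by the AI Act; the function names and the two example models are hypothetical.

```python
# Presumption threshold from the article: more than 10^25 floating-point
# operations of cumulative training compute.
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimate_training_flop(n_parameters: float, n_tokens: float) -> float:
    """Rough estimate: about 6 operations per parameter per training token.

    Assumption: this rule of thumb only approximates dense transformer
    training; it is not the measurement method of the Regulation.
    """
    return 6.0 * n_parameters * n_tokens


def presumed_systemic_risk(training_flop: float) -> bool:
    """True if the compute strictly exceeds the threshold."""
    return training_flop > SYSTEMIC_RISK_THRESHOLD_FLOP


# Hypothetical models, chosen only to land on either side of the threshold.
small = estimate_training_flop(7e9, 2e12)    # 7 billion parameters, 2 trillion tokens
large = estimate_training_flop(5e11, 1e13)   # 500 billion parameters, 10 trillion tokens

for name, flop in [("small", small), ("large", large)]:
    print(f"{name}: {flop:.1e} operations, presumed systemic risk: "
          f"{presumed_systemic_risk(flop)}")
```

Under these assumptions the smaller model stays more than two orders of magnitude below the threshold, while the larger one exceeds it by a factor of about three.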

Obligations

All GPAI models placed on the market are generally subject to documentation and design requirements, as well as requirements to comply with EU copyright law, particularly with regard to text and data mining. The documentation requirements do not apply to GPAI models that are released under a free and open-source licence, unless they are GPAI models with systemic risk; the copyright-related requirements continue to apply.

Providers of GPAI models with systemic risk must meet additional requirements. These include conducting a model evaluation; tracking, documenting and reporting serious incidents and remedial actions to the (newly created) AI Office; and ensuring an appropriate level of cybersecurity for the model and its physical infrastructure. In addition, potential ‘systemic risks at Union level’ need to be assessed and mitigated.

Outlook

The obligations under the Regulation will be phased in between February 2025 and August 2027. Companies affected by the AI Act should therefore familiarise themselves with the new rules immediately and in detail. In doing so, they will quickly realise that the Regulation was adopted under considerable time pressure and pressure to deliver results. This is reflected in a large number of undefined terms that create considerable legal uncertainty and make implementation difficult.

The Commission now has 12 months to draw up guidelines for the practical implementation of the Regulation (Art. 96 AI Act). However, the implementation deadlines run independently of this, so there is a risk that some requirements will have to be implemented before they have been specified in sufficient detail, and that product development cannot be adjusted in time.

In drawing up these guidelines, the Commission must pay particular attention to the needs of small and medium enterprises, including start-ups (Art. 96 AI Act). However, these needs have hardly been taken into account in the text of the Regulation itself; instead, the EU is once again placing a disproportionate burden in terms of human and financial resources on small companies by treating them on an equal footing with the tech giants.

+ + +